Generative Model

- Discriminative Model: Learn a probability distribution $p(y \mid x)$

- Generative Model: Learn a probability distribution $p(x)$. Sample from it to generate new data

- Conditional Generative Model: Learn $p(x \mid y)$. Generate new data conditioned on input labels

Example

$x$ could be an image; $y$ is the image's class.
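To make the three model types concrete, here is a minimal sketch on a toy joint distribution over two "images" and two classes. The names (`img_a`, `img_b`, `cat`, `dog`) and all probabilities are made up for illustration; the point is only that all three quantities can be read off the same joint $p(x, y)$.

```python
# Toy joint distribution p(x, y); the entries are invented and sum to 1.
joint = {
    ("img_a", "cat"): 0.3,
    ("img_a", "dog"): 0.1,
    ("img_b", "cat"): 0.2,
    ("img_b", "dog"): 0.4,
}

def marginal_x(x):
    """Generative model target p(x): sum the joint over all labels y."""
    return sum(p for (xi, _), p in joint.items() if xi == x)

def marginal_y(y):
    """Label marginal p(y): sum the joint over all inputs x."""
    return sum(p for (_, yi), p in joint.items() if yi == y)

def p_y_given_x(y, x):
    """Discriminative model target p(y | x) = p(x, y) / p(x)."""
    return joint[(x, y)] / marginal_x(x)

def p_x_given_y(x, y):
    """Conditional generative model target p(x | y) = p(x, y) / p(y)."""
    return joint[(x, y)] / marginal_y(y)

print(p_y_given_x("cat", "img_a"))  # how likely the label is, given the image
print(marginal_x("img_a"))          # how likely the image itself is
print(p_x_given_y("img_a", "cat"))  # how likely the image is, given the label
```

Note that $p(y \mid x)$ normalizes over labels for a fixed input, while $p(x)$ and $p(x \mid y)$ normalize over all possible inputs.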

Notation

$p(\cdot)$ denotes probability density functions (PDFs) and probability mass functions (PMFs), which we also simply call probability distributions.

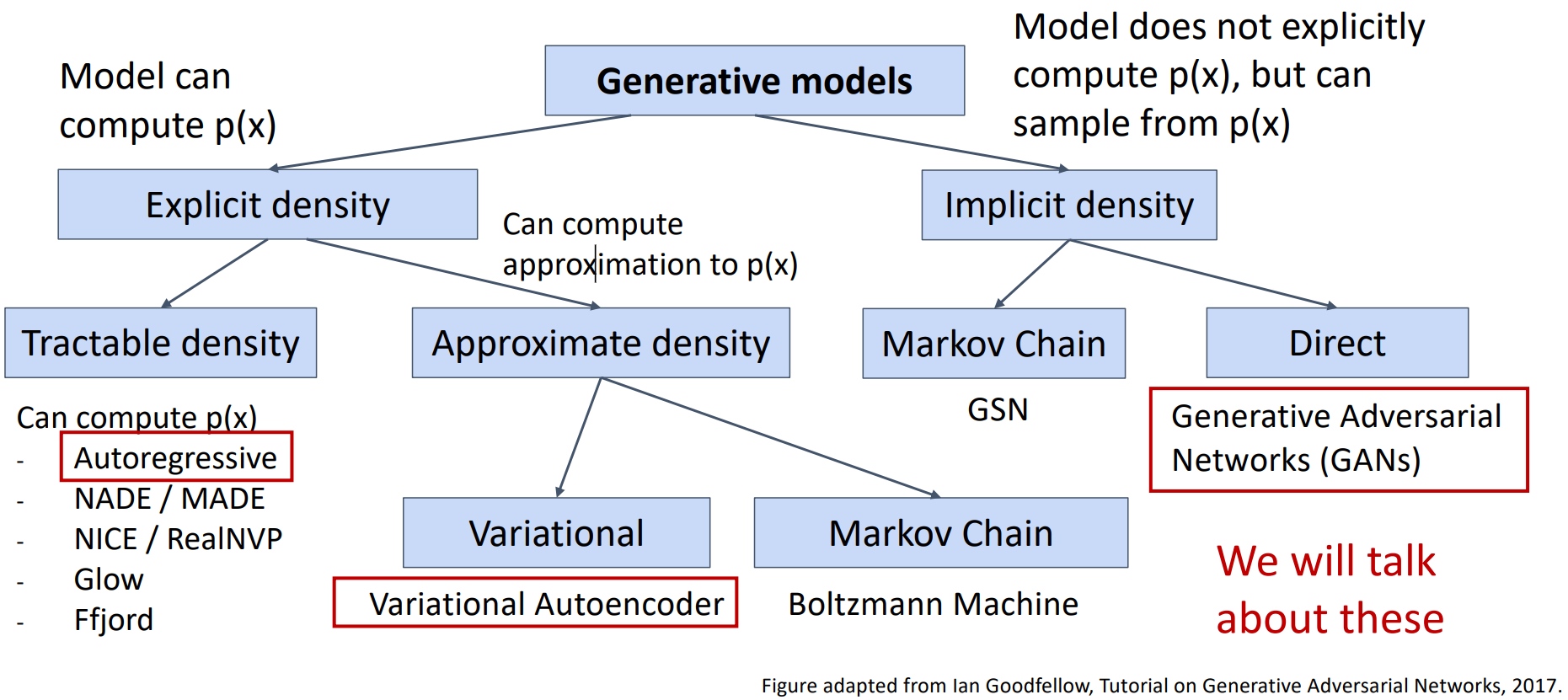

Taxonomy of Generative Models

Variational Autoencoders

Let $x$ be a random sample from an unknown distribution $p(x)$. We also call $x$ an observed variable. We assume that there exists an unobserved (latent) variable $z$ that generates $x$.

How to generate new data

After training, sample new data like this:

- Sample $z$ from the prior $p(z)$, e.g. a Gaussian that is not conditioned on $x$

- Sample $x$ from the conditional $p(x \mid z)$
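The two sampling steps above can be sketched as follows. This is only an illustration under stated assumptions: the prior is a standard Gaussian, and the conditional is a Gaussian whose mean comes from a hypothetical fixed linear decoder (`W`, `b`, and `noise_std` are made-up values, not learned parameters). A real VAE would use a trained neural network in place of `decode_mean`.

```python
import random

def sample_prior(latent_dim, rng):
    """Step 1: draw z ~ p(z) = N(0, I); the prior does not depend on x."""
    return [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]

def decode_mean(z, weights, bias):
    """Hypothetical linear decoder: the mean of p(x | z) is W z + b."""
    return [
        sum(w * zi for w, zi in zip(row, z)) + b_i
        for row, b_i in zip(weights, bias)
    ]

def sample_conditional(z, weights, bias, noise_std, rng):
    """Step 2: draw x ~ p(x | z) = N(W z + b, noise_std^2 I)."""
    mean = decode_mean(z, weights, bias)
    return [rng.gauss(m, noise_std) for m in mean]

def generate(weights, bias, noise_std, rng):
    """Run both steps: z from the prior, then x from the conditional."""
    latent_dim = len(weights[0])
    z = sample_prior(latent_dim, rng)
    x = sample_conditional(z, weights, bias, noise_std, rng)
    return z, x

rng = random.Random(0)  # fixed seed so the run is repeatable
W = [[1.0, 0.0], [0.0, 1.0], [0.5, -0.5]]  # made-up 3x2 decoder weights
b = [0.0, 0.0, 1.0]                        # made-up decoder bias
z, x = generate(W, b, noise_std=0.1, rng=rng)
print("z =", z)
print("x =", x)
```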

How to train the model

Basic idea 1: maximize the likelihood of data

If we could observe $z$ for each $x$ during training, we could train this model by maximizing the likelihood of each $x$ conditioned on its observed $z$. In other words, we could train a conditional generative model $p(x \mid z)$.
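As a sketch of what this fully observed case looks like, assume a one-dimensional linear-Gaussian conditional $p(x \mid z) = \mathcal{N}(a z + b, \sigma^2)$. This model family, the helper name `fit_linear_gaussian`, and the synthetic data are all assumptions made for illustration. Under that assumption, maximizing the conditional likelihood over $a$ and $b$ reduces to least squares, and the maximum-likelihood noise variance is the mean squared residual.

```python
import random

def fit_linear_gaussian(zs, xs):
    """Maximum-likelihood fit of p(x | z) = N(a*z + b, noise_var) from (z, x) pairs."""
    n = len(zs)
    z_mean = sum(zs) / n
    x_mean = sum(xs) / n
    cov = sum((z - z_mean) * (x - x_mean) for z, x in zip(zs, xs))
    var = sum((z - z_mean) ** 2 for z in zs)
    a = cov / var                # least-squares slope
    b = x_mean - a * z_mean      # least-squares intercept
    # The ML estimate of the noise variance is the mean squared residual.
    noise_var = sum((x - (a * z + b)) ** 2 for z, x in zip(zs, xs)) / n
    return a, b, noise_var

# Synthetic data in which z IS observed (made-up ground truth: a=2, b=1, std=0.5).
rng = random.Random(0)
zs = [rng.gauss(0.0, 1.0) for _ in range(5000)]
xs = [2.0 * z + 1.0 + rng.gauss(0.0, 0.5) for z in zs]

a_hat, b_hat, noise_var_hat = fit_linear_gaussian(zs, xs)
print(a_hat, b_hat, noise_var_hat)
```

With enough pairs, the estimates should land close to the values used to generate the data.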

But we can't observe $z$, so we need to maximize the marginal likelihood instead:

$$
p(x) = \int p(x, z)\, dz = \int p(x \mid z)\, p(z)\, dz
$$
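One way to see what the marginal likelihood means is to estimate it by averaging $p(x \mid z)$ over samples of $z$ drawn from the prior. The sketch below does this for an assumed one-dimensional linear-Gaussian model with a standard normal prior, where the integral of $p(x \mid z)\,p(z)$ over $z$ also has a closed form, $\mathcal{N}(x;\, b,\, a^2 + \sigma^2)$, to compare against. The parameter values and function names are made up for illustration.

```python
import math
import random

def normal_pdf(value, mean, std):
    """Density of N(mean, std^2) evaluated at value."""
    return math.exp(-0.5 * ((value - mean) / std) ** 2) / (std * math.sqrt(2.0 * math.pi))

def marginal_likelihood_mc(x, a, b, noise_std, num_samples, rng):
    """Monte Carlo estimate: p(x) is approximated by the average of p(x | z_k), z_k ~ p(z)."""
    total = 0.0
    for _ in range(num_samples):
        z = rng.gauss(0.0, 1.0)                       # z_k ~ p(z) = N(0, 1)
        total += normal_pdf(x, a * z + b, noise_std)  # p(x | z_k)
    return total / num_samples

def marginal_likelihood_exact(x, a, b, noise_std):
    """Closed form for this model: x ~ N(b, a^2 + noise_std^2)."""
    return normal_pdf(x, b, math.sqrt(a * a + noise_std ** 2))

rng = random.Random(0)
estimate = marginal_likelihood_mc(1.5, a=2.0, b=1.0, noise_std=0.5, num_samples=20000, rng=rng)
exact = marginal_likelihood_exact(1.5, a=2.0, b=1.0, noise_std=0.5)
print("Monte Carlo estimate:", estimate)
print("Closed form:         ", exact)
```

This naive estimator is workable in one dimension. With high-dimensional $z$ and a neural-network decoder, most prior samples explain a given $x$ poorly, so the average tends to need impractically many samples.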

Basic idea 2: